Shuvam Misra, 05/02/2019 06:18 PM

Information security policies and processes¶

- Table of contents

- Information security policies and processes

- The big picture

- Measure 1: Monitoring and logging measures

- Measure 2: Tighten identification and authentication

- Measure 3: defence in depth

- Measure 4: the time dimension

- Measure 5: securing the end-user computers

- Recurring processes

- Design of a centralised log repository

- Design of an authorisation management module

- Another sample of recommendations

These policies and processes will apply to most modern information systems today. This document assumes that (many) servers will be cloud-based, and users will use mobile phone apps and browser-based interfaces to access applications.

Use this document as a repository of structured thoughts and ideas, from which you may extract content to create security policy and process documents for new projects or applications.

The big picture¶

Perimeter security¶

For much of human history, the classical security model was a fort, with high walls and moat, and all treasures securely stored behind the fort walls. This model may be referred to as perimeter security, where security depends on keeping the bad guy outside the fort walls at all costs. This model was applied to information security in the initial days of large information systems. The most visible symbol of this model is the firewall. In this model, once you break through a firewall, you can do a lot of damage.

This evolved into "defence in depth" strategies, where a single line separating "inside" from "outside" gave way to multiple layers of progressively greater security, much like the construction of an onion.

Today, the idea of perimeter security, if applied to information systems, needs to be far more nuanced for it to remain relevant. This is because trusted insiders access secure systems and assets over untrusted public networks, and use untrusted devices (e.g. personal mobile phones and laptops) to access these assets. This gives intruders new attack vectors.

Threats today are related to

- intrusion attempts by complete outsiders: the classical perimeter security use-case

- difficulty in authenticating the user: is he who he claims to be?

- the possibility of intruders exploiting channels opened for legitimate users

Your legitimate users now live outside your fort, in unknown villages, and we need to let them access secured assets inside the fort, while keeping intruders out.

Insiders as threats¶

Consider four categories of actors, in the context of a bank:

- unauthorised outsiders: complete strangers, unknown to the bank

- authorised outsiders: customers of, or vendors/suppliers to, the bank, with only limited and well-defined privileges when dealing with the bank

- unauthorised insiders: for example, a clerk who is not authorised to perform a specific operation

- authorised insiders: for example, a clerk who is authorised to perform a certain operation

Of these, authorised insiders are the perpetrators of between 67% and 75% of security incidents, depending on who you ask. This has not changed in 20+ years. This goes totally against the popular perception of the security intruder being an unknown stranger wearing a hoodie, sitting in a dark room in an obscure corner of the world.

This Security Intelligence report starts with

"CISOs and their teams have suspected it for years, but new security

breach research showed that nearly three-quarters of incidents are

due to insider threats."

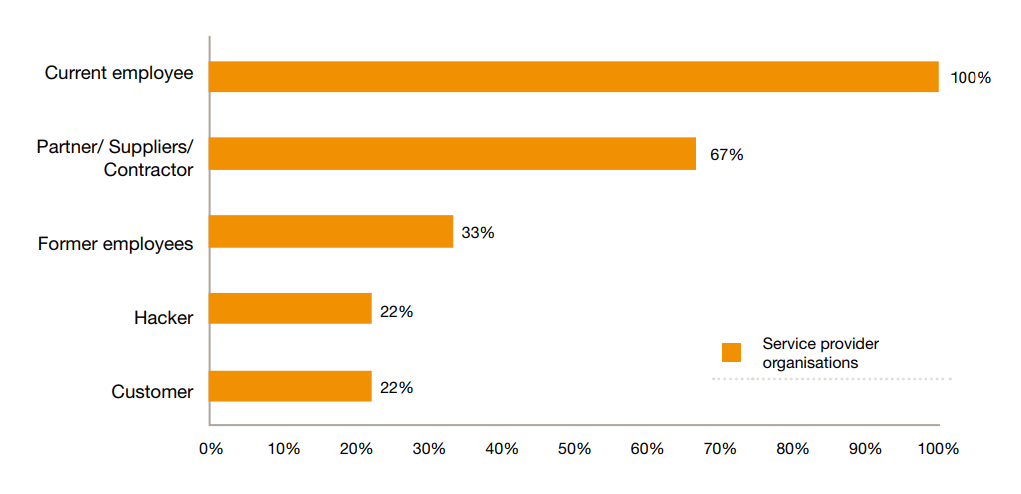

A PwC and DSCI report, circa 2011 has this interesting chart:

which shows, in relative terms, what fraction of security incidents have been perpetrated by authorised insiders of an organisation. Once again, the most common perpetrator is the authorised insider.

Perimeter security completely fails to address insider threats.

Responding to the modern threat scenario¶

The entire strategy for securing of information assets then shifts from checking "Stop! Who goes there?" (the premise of perimeter security) to "Are you trying to do something unusual or suspicious?" (the basic approach of monitoring, recording and reacting).

Therefore, the only way to compensate for this changed scenario is to monitor, record and analyse everything users do with secure assets. In other words, if you can't keep the main gate closed, fit CCTV cameras everywhere, both inside and outside the fort, and respond to any threat perceptions as fast as possible. If necessary, close the main gate temporarily when you see any reason to suspect that an unauthorised operation is being attempted or (even) may be attempted in the near future. This may deny service temporarily to innocent authorised users, but this is often the only way to protect secure assets in the current scenario.

What shall we do?¶

We need to combine a set of measures and deploy every measure at every possible point and level, with the hope that the combination of measures will keep out all but the most tenacious of intrusion attempts. In slightly more concrete terms, we will do the following:

- Measure 1: we will monitor and log everything

- Measure 2: we will take every step to ensure that intruders cannot masquerade as legitimate users

- Measure 3: we will implement defence in depth, by implementing a computerised version of the "need to know" principle

- Measure 4: we will implement time limits on access privileges wherever possible, to minimise the window of exposure

- Measure 5: we will make the work environments as secure as possible

This list of strategies will now be expanded and developed into a set of concrete measures, documented below.

Measure 1: Monitoring and logging measures¶

We will monitor and log everything, and if possible, throw pattern detection algorithms at the logged data to detect "unusual" patterns. In this context, "unusual" implies "suspicious".

A centralised log repository¶

The information systems (there are many) must all log all normal user activities in a central log repository. This log repository must have the following characteristics:

- the data must be structured into fields, so that at least some degree of select operation is possible. Text files make for useless logs for everything other than defence in court cases.

- the data must be in a database, to allow quick retrieval of random subsets (again, a fast select operation). Text files make for useless logs.

- the data must be in one place, otherwise, after the first security incident, vendors will sell you products costing hundreds of thousands of dollars simply to aggregate disparate logs

- the data must be stored using systems which do not allow application code to modify or delete entries even if a programmer tries to do so

- When off-the-shelf applications are part of the system, e.g. MS Dynamics or Oracle Financials, then the proprietary logs of such applications must be imported into the central log repository using connectors or log-import utility tools, which must run automatically at pre-set times.

The design of this logging module is documented separately. Application code must include a few lines of code at the right places to log events into the centralised repository.
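As a rough illustration of the characteristics listed above, here is a minimal sketch of a structured, append-only log table. It uses SQLite from the Python standard library purely so that the example is self-contained; the table name, column names and the helper functions `open_log` and `log_event` are assumptions made for this sketch, not part of the separately documented design. In a real deployment, the "no update, no delete" rule would be better enforced with database permissions on a central server, since a trigger can be dropped by anyone who controls the database file.

```python
import json
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema: one row per user action, split into queryable fields.
SCHEMA = """
CREATE TABLE IF NOT EXISTS event_log (
    id        INTEGER PRIMARY KEY AUTOINCREMENT,
    logged_at TEXT NOT NULL,   -- UTC timestamp, ISO 8601
    app       TEXT NOT NULL,   -- which information system wrote the entry
    username  TEXT NOT NULL,
    remote_ip TEXT,
    action    TEXT NOT NULL,
    details   TEXT             -- JSON for application-specific fields
);
CREATE TRIGGER IF NOT EXISTS event_log_no_update
BEFORE UPDATE ON event_log
BEGIN SELECT RAISE(ABORT, 'event_log is append-only'); END;
CREATE TRIGGER IF NOT EXISTS event_log_no_delete
BEFORE DELETE ON event_log
BEGIN SELECT RAISE(ABORT, 'event_log is append-only'); END;
"""


def open_log(path=":memory:"):
    """Open (and if necessary create) the log database."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def log_event(conn, app, username, action, remote_ip=None, **details):
    """The 'few lines of code' an application would call to record an event."""
    conn.execute(
        "INSERT INTO event_log (logged_at, app, username, remote_ip, action, details)"
        " VALUES (?, ?, ?, ?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), app, username, remote_ip,
         action, json.dumps(details)),
    )
    conn.commit()
```

Because each entry is a row with named fields, a question like "what did this user do in the payroll system last week?" becomes a single select statement instead of a search through text files.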

Log geo-IP of all remote endpoints¶

Whenever any remote device, e.g. a mobile app or browser, connects to the central information systems, the IP address of that remote device must be logged, and its geo-IP parameters must be logged too. At the very least, the country, city, and ISP must be logged. These geo-IP parameters must of course be included in logs in the central log repository above.

I have had good experience using Maxmind. Other services are available too.

Use tcpdump/ Wireshark¶

Install a packet dumping and network traffic analysis tool on all servers which act as gateways or have valuable information assets. These tools need not run at their highest capture level (they can be configured to collect data in varying degrees of detail, up to the extreme case where each byte of each incoming and outgoing packet is logged), and their logs may be retained by default for just a few days. But they must be kept active, so that network traffic for the last day or two may be examined closely as soon as there is any sign of suspicious activity.

Measure 2: Tighten identification and authentication¶

The problem with simple things like user account management is that it's often too difficult for the operator to do a good job easily. So he leaves loopholes, which are innocuous from the point of view of system usage, but are dangerous from the point of view of security. The commonest problem is dead accounts -- accounts which should have been deleted but have not been.

Single global user directory¶

The entire collection of information systems must be integrated to refer to a single user directory for authoritative information about users and their security credentials. This directory is typically an OpenLDAP or MS Active Directory system. All user accounts must be created in just this directory, and the authoritative copy of the user's password must be stored in this record. This data may be auto-replicated to other directories, but all copies must be replicas -- no replica may be maintained or edited by manual operations.

See this page for a design of a central authorisation module.

Password strength¶

Today, passwords are not more secure because they have combinations of upper case, lower case, digits, etc. There is not much relevance for "unguessable" passwords, because passwords are no longer guessed by humans -- they are subjected to brute force attack, using botnets if necessary. Therefore, the only type of password which is (relatively) more resistant to such attacks is a longer password, not an unguessable one.

Therefore, the password hygiene policy enforced on all users must demand

- a mix of all sorts of characters to make it "unguessable"

- a minimum length of at least, say, 20 characters

- no substring of the password must be a dictionary word or a sequence of digits (e.g. "1234")

The minimum length is really the most important attribute to make the password more secure.
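The policy above can be sketched as a small checker. This is only an illustration under stated assumptions: the word list is a tiny placeholder for a real dictionary, "a sequence of digits" is interpreted here as four or more consecutive ascending or descending digits, and the function names are invented for this example. The second function shows why length matters most: the size of the brute-force search space, in bits, grows linearly with length but only logarithmically with the size of the character set.

```python
import math
import re

MIN_LENGTH = 20
# Tiny illustrative word list; a real check would load a full dictionary.
DICTIONARY = {"password", "welcome", "dragon", "monkey", "summer"}


def has_digit_run(password, run_length=4):
    """True if the password contains e.g. '1234' or '4321' (assumed meaning)."""
    digits = "0123456789"
    runs = [digits[i:i + run_length] for i in range(len(digits) - run_length + 1)]
    runs += [run[::-1] for run in runs]
    return any(run in password for run in runs)


def password_problems(password, dictionary=DICTIONARY, min_length=MIN_LENGTH):
    """Return a list of policy violations; an empty list means acceptable."""
    problems = []
    if len(password) < min_length:
        problems.append("too short")
    classes = [r"[a-z]", r"[A-Z]", r"[0-9]", r"[^a-zA-Z0-9]"]
    if not all(re.search(pattern, password) for pattern in classes):
        problems.append("needs lower case, upper case, digits and symbols")
    lowered = password.lower()
    if any(word in lowered for word in dictionary):
        problems.append("contains a dictionary word")
    if has_digit_run(password):
        problems.append("contains a digit sequence")
    return problems


def search_space_bits(length, alphabet_size):
    """Bits of brute-force search space for a random password."""
    return length * math.log2(alphabet_size)
```

For example, `search_space_bits(20, 26)` (twenty random lower-case letters) is about 94 bits, while `search_space_bits(8, 94)` (eight characters drawn from all printable ASCII) is about 52 bits.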

Two factor authentication¶

Support for two-factor authentication is mandatory. This may not be demanded for all operations, but the support must be provided for operations where security is deemed more critical.

The simplest way to implement this is by using an SMS OTP to a registered mobile number. A more reliable method may use an app like the Google Authenticator, though this may involve development costs.
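Authenticator apps of this kind generate time-based one-time passwords (TOTP, RFC 6238, built on HOTP, RFC 4226). The following is a minimal sketch of the server-side calculation using only the Python standard library, to give a sense of the development effort involved. The function names and the one-step clock-drift window are choices made for this example; a production system would also need secure secret storage, base32 handling of the shared secret as used by the apps, and protection against code reuse.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time step."""
    if now is None:
        now = time.time()
    return hotp(secret, int(now) // step, digits)


def verify_totp(secret, submitted, now=None, step=30, digits=6, window=1):
    """Accept the current code or one from an adjacent time step."""
    if now is None:
        now = time.time()
    counter = int(now) // step
    candidates = (counter + drift for drift in range(-window, window + 1))
    return any(
        hmac.compare_digest(hotp(secret, c, digits), submitted)
        for c in candidates
        if c >= 0
    )
```

The user's app and the server share the secret once, at enrolment; after that, both sides compute the same code from the current time, so no SMS delivery is involved.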

Physical location and endpoint device tracking¶

A simple way to detect possible identity masquerading attempts is by checking the physical location of the user. A user in Dubai should not have his identity misused by an intruder sitting in Dar Es Salaam simply because the password has been leaked or broken. The fact that the actual user usually logs in from Dubai while the intruder is logging in from Dar Es Salaam should be sufficient information to block, or at least report, this intrusion attempt.

To enable such detection and blockage, the physical location of the user must be tracked and correlated against every access attempt. This can be done using geo-IP mapping of the user's usual accesses in the past.

A similar mapping must be maintained of the endpoint devices each user typically uses, and attempts to log in from a device which has not been seen before for this user must be reported or blocked. If the user usually uses an iPhone and Safari on a MacBook to access the systems, then an attempt by (ostensibly) the same user to access from a OnePlus mobile device should be reported or blocked.

In other words, the typical physical locations and the endpoint devices for a user are to be treated as part of the user's identity. If accesses from the endpoint device are over HTTP, then the User-Agent header in each HTTP request (exposed as HTTP_USER_AGENT in CGI-style environments) usually contains enough information to identify both browser and OS platform. The client controls this header, so it is a useful hint and not proof. This Perl module parses the User-Agent string and lets your code just get a ready-made identification of browser type and OS platform; there are similar libraries and modules in other programming languages. One such module must be used to parse the string and use the inferred data to track the user's behaviour profile.

The authoritative data about physical locations and endpoint devices may be gathered during successful login attempts done using two-factor authentication, and this data must be compared against current location and current endpoint device type for every access to every Internet-facing service, including access by mobile apps to web services. The impact of this security measure is lost if current data is cross-checked against authoritative data only for login attempts.
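The comparison described above might look like the following sketch. It assumes the country code has already been obtained from a geo-IP lookup elsewhere, and it classifies devices with a deliberately crude substring check on the User-Agent string; a maintained parsing library would be preferable in practice. The class and function names are invented for this illustration.

```python
from dataclasses import dataclass, field


def device_family(user_agent: str) -> str:
    """Very rough device classification from a User-Agent string."""
    ua = user_agent.lower()
    if "iphone" in ua:
        return "iphone"
    if "android" in ua:
        return "android"
    if "macintosh" in ua:
        return "mac"
    if "windows" in ua:
        return "windows"
    return "other"


@dataclass
class UserProfile:
    """Locations and devices treated as part of one user's identity."""
    countries: set = field(default_factory=set)
    devices: set = field(default_factory=set)

    def learn(self, country: str, user_agent: str) -> None:
        """Record authoritative data; call only after a two-factor login."""
        self.countries.add(country)
        self.devices.add(device_family(user_agent))

    def anomalies(self, country: str, user_agent: str) -> list:
        """Compare one access (not just a login) against the profile."""
        found = []
        if country not in self.countries:
            found.append(f"unfamiliar country: {country}")
        device = device_family(user_agent)
        if device not in self.devices:
            found.append(f"unfamiliar device: {device}")
        return found
```

Each web service request would call `anomalies()` and then report or block on a non-empty result, which is what makes the check effective beyond the login screen.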

Tie HR processes with user account management¶

User accounts should not be created, deactivated, or deleted by system operators of the IT department. These operations must be performed by the HR department, through a workflow involving a maker-checker process and a requisition-and-sanction workflow.

A small software module must be built for user management, which must have the following workflow steps:

- an officer requests that a user account be created, giving

- details of the person

- the duration for which this account is needed (e.g. temporary accounts may be for just a few days)

- another officer with authorization privileges must approve this request

- someone from the HR team must fill in any additional details needed and click the "Create account" button

- the account should then be created (including insertion of a record in the Active Directory or LDAP, etc) without further human intervention.

Similar workflow steps must be followed for user account deactivation and deletion.
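The creation workflow above is essentially a small state machine. The sketch below is one way to model it, under the assumption that "maker-checker" means the approving officer must differ from the requesting officer. The class, the state names, and the plain dict standing in for the LDAP or Active Directory server are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class State(Enum):
    REQUESTED = "requested"
    APPROVED = "approved"
    CREATED = "created"


@dataclass
class AccountRequest:
    person: str
    requested_by: str                  # the "maker"
    expires_on: Optional[date] = None  # None means a permanent account
    state: State = State.REQUESTED
    approved_by: Optional[str] = None  # the "checker"

    def approve(self, officer: str, can_authorise: bool) -> None:
        if self.state is not State.REQUESTED:
            raise ValueError("only a pending request can be approved")
        if not can_authorise:
            raise PermissionError("officer lacks authorisation privileges")
        if officer == self.requested_by:
            raise PermissionError("maker and checker must be different people")
        self.approved_by = officer
        self.state = State.APPROVED

    def create(self, hr_officer: str, directory: dict) -> None:
        """Final HR step; the directory write needs no further human action."""
        if self.state is not State.APPROVED:
            raise ValueError("request has not been approved")
        directory[self.person] = {
            "expires_on": self.expires_on,
            "created_by": hr_officer,
        }
        self.state = State.CREATED
```

Because the directory entry can only be written from the `APPROVED` state, there is no code path by which an account appears without a recorded requester and approver.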

This is the only way to ensure that dead accounts are not created in the system by the IT Dept for various "system related" or "testing related" reasons and left lying there.

These features must be implemented as part of the central authorisation module described elsewhere.

Measure 3: defence in depth¶

These measures are a sophisticated version of perimeter security, converted into a multi-layered security architecture.

Firewalls wherever possible¶

This is self-explanatory. Firewalls are needed

- at the gateway for each office, and

- at gateway points for all server clusters hosted in cloud infrastructure

The word "firewall" here means anything which improves gateway security by inspecting and sometimes blocking traffic. Therefore "firewall" here includes IDS and web application firewalls too. All those appliances may be deployed as found fit. I am deliberately not getting into details of what needs to be done -- gateway security is a well understood subject.

IP address based access rules¶

Access rules must use IP addresses, wherever IP addresses are static.

Linux (and most probably other) operating system kernels support IP packet filtering features which allow low level filtering of network packets on

(srcIP, srcport, destIP, destport)

This filtering, being implemented at a fairly low level in the kernel, is (relatively) impervious to subversion. Therefore, this feature should be configured for all servers which communicate for any purpose with counterparties which have fixed IP addresses. For instance, a server may have an HTTP service which accepts connections from any possible remote IP address, but a data-transfer SSH service which communicates only with two other servers. In such a situation, at least the SSH service must be protected by using IP packet filtering rules which restrict traffic on port 22 (the SSH port) to only two remote IP addresses.

This must be done on each server, for each service for which such rules may be made to apply.
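The actual enforcement belongs in the kernel's packet filter, not in application code. Still, the logic of the HTTP-versus-SSH example above can be modelled in a few lines, which may be useful for reviewing or unit-testing a rule table before it is translated into firewall rules. The rule table, the IP addresses (taken from documentation ranges) and the function name are assumptions for this sketch.

```python
import ipaddress

# Destination port -> allowed source networks; None means "any source".
# Ports not listed are denied by default.
RULES = {
    80: None,
    443: None,
    22: [
        ipaddress.ip_network("198.51.100.10/32"),
        ipaddress.ip_network("198.51.100.11/32"),
    ],
}


def accept(src_ip: str, dest_port: int, rules=RULES) -> bool:
    """Decide whether a packet would pass the modelled rule table."""
    if dest_port not in rules:
        return False  # default deny
    allowed = rules[dest_port]
    if allowed is None:
        return True
    addr = ipaddress.ip_address(src_ip)
    return any(addr in network for network in allowed)
```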

Authorisation based on source location and device¶

Access to secure operations may be restricted to certain types of devices and certain physical locations, based on their IP addresses. For instance, a financial accounting system may allow filing of expense claims from any remote IP address, but editing of bank account details of counterparties only from desktop-based browsers connecting from the office LAN of the main offices.

Support for such differential access rules must be implemented wherever possible. This dramatically reduces the attack surface for intruders.

Geo-IP based access rules¶

Is there much likelihood that officers of the organisation will need to access secure information assets from locations in the Republic of Cote d'Ivoire, or East Timor? If the answer is "no", such accesses must be prevented.

Geo-IP mapping allows an information system to locate the physical location of the remote endpoint, and this may be used to reduce the attack surface for the system.

It should be mandatory that all information systems in the organisation use a centralised access management system, managed from an administrative panel, which controls the list of countries and (optionally) cities from which remote connections will be accepted.

It is strongly recommended that this control be extended to individual user accounts at a finer granularity, so that a specific officer operating out of the Democratic Republic of Timor Leste or Macau Special Administrative Region may get access to the systems, but access from these countries will be barred for all other users.
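A minimal sketch of the two-level rule described above: a global list of permitted countries, plus per-user exceptions. The country lists and usernames are invented for illustration, and the ISO 3166 country code is assumed to come from a geo-IP lookup performed elsewhere.

```python
# Hypothetical configuration, normally edited from the administrative panel.
ALLOWED_COUNTRIES = {"IN", "AE", "SG"}
USER_EXCEPTIONS = {
    "officer.dili": {"TL"},   # Timor-Leste
    "officer.macau": {"MO"},  # Macau SAR
}


def country_allowed(username, country_code,
                    allowed=ALLOWED_COUNTRIES, exceptions=USER_EXCEPTIONS):
    """Global allow-list first, then the per-user exception list."""
    if country_code in allowed:
        return True
    return country_code in exceptions.get(username, set())
```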

Track remote IP address reputation¶

Not all IP addresses are equal. Internet banking systems, online credit card billing gateways, and other online systems have been making use of these insights for at least two decades.

The following types of disreputable IP addresses are tracked by security-conscious systems:

- IP addresses from which financial frauds have been attempted in the past

- IP addresses of VPN service providers which permit users to access the Internet by hiding their true origin IP address

- IP addresses of HTTP anonymisers, which are publicly run HTTP proxy servers, operating with the general aim of hiding the origin IP address

- IP addresses listed as heavy generators of email spam

There are commercially available databases of such IP addresses, and these lists are updated frequently. Shalla's Blacklists and MaxMind minFraud IP Score are just two examples. There are better lists. There is a community of email spam blacklists, which may be used to identify email spamming IP addresses.

The information systems must block all access attempts from IP addresses with poor reputation.

Rate throttling¶

If accesses are hitting an Internet facing service too fast from a specific IP address or subnet, then these accesses may be rate throttled or deliberately slowed down.

This is an effective method to "choke" an intruder using automated tools to try to break into a system.

This must be implemented by all Internet-facing services, where the exact parameters for the threshold to trigger rate throttling must be configurable.
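One common way to implement this is a sliding-window counter per source, sketched below. The class name and default thresholds are assumptions, and both parameters are constructor arguments so that they stay configurable. The key passed to `allow()` can be a single IP address or a subnet string, depending on the granularity wanted.

```python
import time
from collections import defaultdict, deque


class RateThrottle:
    """Allow at most max_requests per source within window_seconds."""

    def __init__(self, max_requests=20, window_seconds=10.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable, which makes the class testable
        self._hits = defaultdict(deque)

    def allow(self, source: str) -> bool:
        now = self.clock()
        hits = self._hits[source]
        # Forget requests that have slid out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # caller should delay or reject this request
        hits.append(now)
        return True
```

A service that receives `False` may reject the request outright or simply sleep before answering; the latter is the "deliberately slowed down" variant.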

Application vulnerability assessment¶

Internet-facing application software interfaces (web screens, web services, etc) are often insecure. Cross-site scripting and SQL injection are both quite common vulnerabilities of application software interfaces.

Vulnerability assessment audits must be carried out for all Internet-facing interfaces of the systems being built.

Key based VPN for external access¶

Access to internal services and information assets must be permitted only through a VPN. Authentication for the VPN connection must be through two-factor authentication or public-key based keys, not by passwords alone. Passwords alone are no longer secure for any Internet-facing interfaces.

Digital Data Rooms (DDR)¶

Digital data rooms are the mechanism to build the next level of security for information assets, especially while collaborating.

TO BE DETAILED OUT.

Measure 4: the time dimension¶

Temporary user accounts¶

The system must support the creation of user accounts with expiry dates. If the account itself does not self-delete after expiry, the password must auto-reset to an unknown and unguessable string.

Expiry dates for authorisation rules¶

Authorisation rules must support the expiry date attribute. It is accepted that most authorisation rules will be permanent, but every system acquires some authorisation rules over time which were meant to be temporary but were left behind as no one remembered to delete them when their purpose was served.
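Both ideas in this section come down to storing an optional expiry date and checking it on every use. The sketch below shows this for authorisation rules, together with a helper that produces the "unknown and unguessable" replacement password for an expired account. The class, field and function names are assumptions for this illustration.

```python
import secrets
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AuthRule:
    username: str
    permission: str
    expires_on: Optional[date] = None  # None means a permanent rule

    def active(self, today: date) -> bool:
        """A rule remains valid up to and including its expiry date."""
        return self.expires_on is None or today <= self.expires_on


def is_permitted(rules, username, permission, today):
    """True if any unexpired rule grants the permission to the user."""
    return any(
        rule.username == username
        and rule.permission == permission
        and rule.active(today)
        for rule in rules
    )


def scrambled_password() -> str:
    """Random string to set on an expired account; never shown to anyone."""
    return secrets.token_urlsafe(32)
```

Checking the date at evaluation time means a forgotten temporary rule stops working on its own, even if nobody ever deletes it.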

Out-of-office notifications, leave¶

All out-of-office notification for email systems should be inserted through a small layer of custom software, which inserts the notification message in the email server but also makes a record of the start and end date in a security logging table. This information will be used by security monitoring systems to monitor activities in that user account during the out-of-office period. Misuse of accounts for absent users is a common pattern which usually goes undetected.

The same mechanism must be built into the software through which an employee logs his leave requests. The period of leave must be used by the security monitoring system to scrutinise very closely all activities done by that user.
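Once absence periods are recorded in a table, the monitoring check itself is simple. The following sketch assumes activity events are available as (username, day, action) tuples and absences as (start, end) date pairs per user; both shapes, and the function names, are invented for this example.

```python
from datetime import date


def during_absence(day, absences):
    """True if the day falls inside any recorded (start, end) period."""
    return any(start <= day <= end for start, end in absences)


def flag_activity(events, absences_by_user):
    """Return the events that occurred while the user was recorded as away."""
    return [
        event for event in events
        if during_absence(event[1], absences_by_user.get(event[0], []))
    ]
```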

Monitor idle accounts¶

Accounts which have seen no login and no activity for more than X days must be monitored very closely, and all activities of such accounts must be treated as suspicious. It is a common pattern that intruders will misuse an account lying idle for a few months. Accounts of employees on sabbatical or maternity/ paternity leave are common victims.

All accounts which have seen no activity for more than X days must be reported to system administrators and the HR team in a separate report, perhaps once a week, to bring dead accounts to the attention of managers.
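The weekly report could be produced by something as small as the sketch below. It assumes the last activity date of each account can be pulled from the central log repository as a mapping, with None for accounts that have never been used; the function name and the data shape are hypothetical, and X is left as a parameter.

```python
from datetime import date, timedelta


def idle_accounts(last_activity, today, max_idle_days):
    """Accounts with no activity for more than max_idle_days, sorted by name."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(
        username
        for username, last_seen in last_activity.items()
        if last_seen is None or last_seen < cutoff
    )
```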

Measure 5: securing the end-user computers¶

Patches and AV¶

All operating systems, specially of desktops and laptops, must be maintained up-to-date with the latest security patches and bug fix updates applied. This must be done

- within seven days of the patches and updates being released by the OS maintainer, for desktops, laptops and mobile devices

- at least once a quarter, for servers

All desktops and laptops must keep their malware filters active, and the malware signatures updated, at all times.

No admin rights to users¶

Users of company-owned laptops and desktops must not be given administrator privileges on the computers assigned to them. Such computers must have a "root account" or administrator account, with its password known only to the IT Dept's support engineers, and a non-privileged ordinary user account to be used by the human user for his work.

Most intrusion attempts and malware infections of a desktop system succeed because the user is using the computer as an administrator.

Ad blockers on browsers¶

The most common vectors for transmitting malware to desktops and laptops today are

- online ads which have malicious code in Javascript (see this Tripwire article)

- email which has links which the unsuspecting recipient clicks, downloading malware

Email with malware links is (we hope) being filtered by spam filters and malware filters. Attempts to click on a link to a malware-laden page will (we hope) be blocked by a UTM device in the office which will have a list of blacklisted or suspicious URLs. The remaining threat vector is online ads.

Therefore, it is mandatory that all browsers on all desktops and laptops must run instances of an adware filter like Adblock Plus. Such ad blockers have three benefits:

- they make the browsing experience less irritating, because ads are blocked

- they save a significant chunk of Internet bandwidth for the premises, because studies have shown that ads account for 25-33% of all web browsing bandwidth.

- they make the computer secure by blocking the most common vector for malware transmission.

Outgoing email volume tracking per computer¶

The volume of outgoing email (in terms of number of messages) being generated by each individual desktop or laptop must be tracked and monitored.

One of the commonest uses for a compromised desktop or laptop is to send spam. All such computers being used for spam generation can be detected by monitoring for unusually high outgoing SMTP traffic. The volume of traffic from an infected computer is several times that of the average volume in the organisation.

Such computers must be detected and taken down immediately, to remove the malware infection.
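A minimal sketch of the detection step, assuming per-computer message counts for a period have already been extracted from the mail server logs. Note one deliberate substitution: the text above compares against the average, whereas this sketch compares against the median, because a single spamming machine can inflate the average enough to hide itself. The multiplier, the minimum floor and the function name are illustrative assumptions.

```python
from statistics import median


def suspicious_senders(counts, factor=5.0, floor=50):
    """Hosts whose outgoing message count far exceeds the typical volume.

    counts maps a host name to the number of messages sent in the period.
    The floor avoids flagging hosts when overall volumes are tiny.
    """
    if not counts:
        return []
    typical = median(counts.values())
    threshold = max(floor, factor * typical)
    return sorted(host for host, sent in counts.items() if sent > threshold)
```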

Recurring processes¶

The following is a brief, likely incomplete, list of ongoing processes which must be executed as per a calendar to maintain security of information assets.

- Inspect all log entries of priority critical or higher recorded since the previous working day. Frequency: daily

- Once a pattern identification system has been developed to process the log entries in the central log repository, inspect the report of anomalous or suspicious behaviour generated by this system. Frequency: daily

- Extract all data from backups into a clean system and manually examine the data through random sampling to ensure that there is no known discrepancy between expected and observed data. Frequency: twice a year

- Ensure that OS updates and malware filter signatures are up-to-date. Block non-compliant desktops and laptops from accessing the information systems. (There are security products which may be installed on every computer to enforce such blockages.) Use a centralised patch management system to track update delivery to each computer and monitor which computers have not installed pending updates for more than, say, X days. Frequency: monthly

- Conduct an automated vulnerability scan of the office networks and cloud-based information systems by running an automated scanning program like Nessus or Saint. Frequency: twice a year

- Take a printout of the list of active user accounts in the system, and review this with the HR team to try to identify dead accounts. Frequency: twice a year

- Take a printout of all idle accounts in the system and review the list to see whether any of the idle accounts may be removed. Frequency: quarterly

- Get an external agency to conduct an application vulnerability assessment and penetration testing of all the interfaces of the information systems, specially the newly modified or extended modules. Frequency: once a year

- Get external audit teams to conduct an ISO 27000-series audit (typically against ISO/IEC 27001) of the entire information systems. Frequency: once every two years

Design of a centralised log repository¶

Design notes are given here

Design of an authorisation management module¶

Design notes are given here

Another sample of recommendations¶

Attached is a PDF file containing security recommendations for a browser based business application. The application or organisation is not important -- the security recommendations are valid for most modern business applications which have Internet interfaces.

Updated by Shuvam Misra almost 7 years ago · 9 revisions